| import torch

import torch.nn as nn

from torch.utils.data import Dataset

from torch.utils.data.dataloader import DataLoader

import pandas as pd

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

class BostonHousingDataset(Dataset):

def __init__(self, filepath, is_test=False):

self.is_test = is_test

if is_test:

df = pd.read_csv(filepath)

self.IDs = df.iloc[:, 0]

self.x_data = torch.tensor(df.iloc[:, 1:].values, dtype=torch.float32)

self.len = self.x_data.shape[0]

else:

xy = pd.read_csv(filepath)

self.len = xy.shape[0]

self.x_data = torch.tensor(xy.iloc[:, 1:-1].values, dtype=torch.float32)

self.y_data = torch.tensor(xy.iloc[:, [-1]].values, dtype=torch.float32)

def __getitem__(self, index):

if not self.is_test:

return self.x_data[index], self.y_data[index]

else:

return self.IDs[index], self.x_data[index]

def __len__(self):

return self.len

class Model(nn.Module):

def __init__(self):

super(Model, self).__init__()

self.fc1 = nn.Linear(13, 64)

self.fc2 = nn.Linear(64, 32)

self.fc3 = nn.Linear(32, 16)

self.fc4 = nn.Linear(16, 1)

self.relu = nn.ReLU()

self.sigmoid = nn.Sigmoid()

def forward(self, x):

x = self.sigmoid(self.fc1(x))

x = self.relu(self.fc2(x))

x = self.relu(self.fc3(x))

return self.fc4(x)

dataset = BostonHousingDataset('train.csv')

train_loader = DataLoader(dataset=dataset,

batch_size=32,

shuffle=True,

num_workers=0)

model = Model().to(device)

criterion = nn.MSELoss(reduction='mean')

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

def train(epoch):

model.train()

for i, data in enumerate(train_loader, 0):

inputs, labels = data

inputs, labels = inputs.to(device), labels.to(device)

outputs = model(inputs)

loss = criterion(outputs, labels.view(-1, 1))

optimizer.zero_grad()

loss.backward()

optimizer.step()

print(f"Epoch {epoch + 1}/{epochs}, Loss: {loss}")

if __name__ == '__main__':

epochs = 100

for epoch in range(epochs):

train(epoch)

test_dataset = BostonHousingDataset('test.csv', True)

test_loader = DataLoader(dataset=test_dataset,

batch_size=1,

shuffle=False)

model.eval()

predictions = []

IDs = []

with torch.no_grad():

for ID, inputs in test_loader:

inputs = inputs.to(device)

output = model(inputs)

predictions.append(output.item())

IDs.append(ID.item())

submission = pd.DataFrame({'ID': IDs, 'medv': predictions})

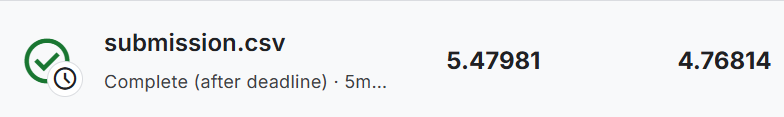

submission.to_csv('submission.csv', index=False)

|
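
The `nn.MSELoss(reduction='mean')` criterion used in the listing averages the squared differences between predictions and targets over every element in the batch. A minimal plain-Python sketch of that arithmetic follows; the helper name `mse_mean` and the sample values are made up for illustration and are not part of PyTorch or of the original script.

```python
def mse_mean(predictions, targets):
    """Mean of squared differences, the quantity MSELoss(reduction='mean') reports."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    squared_errors = [(p - t) ** 2 for p, t in zip(predictions, targets)]
    return sum(squared_errors) / len(squared_errors)


# Hypothetical values (in the units of `medv`) for a batch of four rows.
batch_predictions = [24.0, 21.5, 30.0, 18.0]
batch_targets = [22.0, 21.5, 33.0, 19.0]

batch_loss = mse_mean(batch_predictions, batch_targets)
print(batch_loss)  # (4 + 0 + 9 + 1) / 4 = 3.5
```

Because the loss is a squared error, taking its square root gives a figure in the same units as `medv`, which is usually easier to interpret.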